SAS Enterprise Miner (EM) is indeed a fancy tool for a SAS programmer who wants to move into data mining. It works like a point-and-click camera: you drag several nodes onto the diagram, run it, and everything is settled. I was quite impressed by the rich reports and figures SAS EM generates automatically. I spent several months learning it, passed a SAS certification exam, and finished some pet projects with it. It seems you don't even need to know anything about statistics to be a data miner. Does that sound too good to be true?

Some barriers stand in the way of using SAS EM in practice. The first is price. SAS charges roughly $30k for a retail license (I am not sure about the exact figure). Only deep-pocketed companies like AT&T can boast a UNIX-Teradata-SAS EM stack. Many mid-size and small companies can hardly afford it, so they have to do data mining in their own ways, with or without SAS.

The second barrier is installation and maintenance. Because I was taking a training class, I could purchase a license for all SAS products for a limited time at a reasonable price. I then tried numerous times to install SAS EM on my computer and failed, and none of my classmates succeeded either. SAS customer service told me that the standard approach is to hire a $50k-a-year system engineer, trained by SAS, to install SAS Enterprise Miner properly. Of course, that adds to the total cost of SAS EM.

Third, the execution process is a black box. Each run invokes a number of procedures from SAS Foundation, but the log reveals little beyond whether the run succeeded or failed. For SAS programmers used to scanning the log for NOTE, WARNING, and ERROR messages, this is a painful experience. I once ran the same process on two computers with SAS EM installed and got different results. Everything was identical, so I could not figure out why the results differed.

Fourth, SAS EM is not very efficient. It uses J2EE to achieve cross-platform support, which slows execution: data and statistics have to pass from SAS Foundation through the J2EE layer before they reach the XML layer of SAS EM. Running a large dataset, say more than 100 MB, on a mainstream computer with SAS EM can take a very long time, sometimes intolerably long.

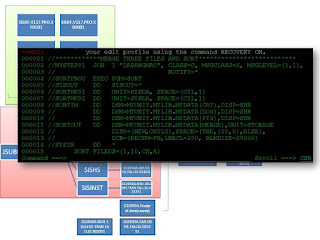

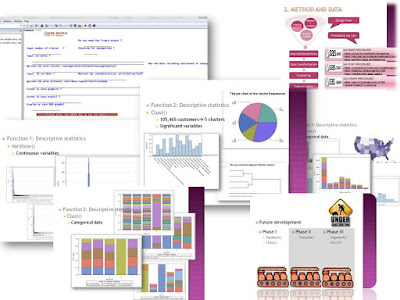

Actually, SAS Foundation, mainly Base SAS and SAS/STAT, is good enough for routine data mining jobs (a few procedures may still require a SAS Enterprise Miner license). For example, to emulate the Cluster node in SAS EM we have several options, such as Proc Cluster, Proc Fastclus, Proc Aceclus, Proc Distance and Proc Tree; for dimension reduction by principal component analysis (PCA) or factor analysis (FA), Proc Prinqual, Proc Princomp and Proc Factor are ready to use; to build a decision tree, calling Proc Arboretum directly may provide more flexibility. Almost all SAS EM functionality can be reproduced in SAS 9.2. For graphics, the SG procedures are handy tools for customizing output images. Macros are a good choice for companies that need to encapsulate and reuse their code. If a GUI is desired, Dr. Fernandez suggests in his book using compiled macros. Another benefit is that we can see what the intermediate datasets look like.
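As a rough illustration of how a few Foundation procedures can stand in for the Cluster node, here is a minimal sketch. It assumes SAS/STAT is licensed (on SAS 9.2, PROC SGPLOT may also need SAS/GRAPH), and the choice of SASHELP.CARS, the four input variables, two principal components, and MAXCLUSTERS=5 are arbitrary illustrative settings, not SAS EM defaults. Every intermediate dataset (WORK.CARS_STD, WORK.CARS_PC, WORK.CARS_CLUS) stays available for inspection.

```sas
/* Sketch only: a rough stand-in for the EM Cluster node.
   Assumed choices: SASHELP.CARS, these four inputs, 2 components, 5 clusters. */

/* 1. Keep complete cases for the chosen inputs */
data work.cars;
   set sashelp.cars;
   if nmiss(MSRP, Horsepower, Weight, MPG_City) = 0;
run;

/* 2. Standardize each input to mean 0 and standard deviation 1 */
proc stdize data=work.cars out=work.cars_std method=std;
   var MSRP Horsepower Weight MPG_City;
run;

/* 3. Principal components: keep the first two scores (Prin1, Prin2) */
proc princomp data=work.cars_std out=work.cars_pc n=2;
   var MSRP Horsepower Weight MPG_City;
run;

/* 4. k-means style clustering; OUT= adds the CLUSTER and DISTANCE variables */
proc fastclus data=work.cars_pc out=work.cars_clus maxclusters=5 maxiter=100;
   var MSRP Horsepower Weight MPG_City;
run;

/* 5. Inspect an intermediate result, then plot clusters on the PC scores */
proc freq data=work.cars_clus;
   tables Cluster;
run;

proc sgplot data=work.cars_clus;
   scatter x=Prin1 y=Prin2 / group=Cluster;
run;
```

Replacing step 4 with Proc Cluster followed by Proc Tree would give a hierarchical alternative instead of k-means.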

Still, a SAS data miner needs to know statistics. She or he should understand the underlying theory and algorithms, know how to pick and combine the procedures appropriate to a specific task, and be willing to do the dirty work of writing code to get the job done. After all, data mining should produce results that are not only fun but also meaningful.

References: George Fernandez. *Statistical Data Mining Using SAS Applications*, Second Edition. Chapman & Hall/CRC Data Mining and Knowledge Discovery Series. CRC Press.

```sas
/* Call a GUI window to input the variables */
%window mycluster irow=3 rows=33 icolumn=2 columns=60 color=YELLOW
   #1 @15 " Cluster Analysis" color=red attr=underline
   #4 @3 "1. Input the SAS Data set name?" color=blue
   #5 @7 "EX: SASHELP.CARS " attr=rev_video
   #6 @7 data 30 required=yes attr=underline
   #4 @40 "2. Exploratory graphs?" color=blue
   #5 @44 " options: YES " attr=rev_video
   #6 @44 explor 20 attr=underline
   #7 @3 "3. Input number of clusters?" color=blue
   #8 @7 " EX: 5 " attr=rev_video
   #9 @7 num 20 required=yes attr=underline
   #7 @40 "4. Checking for assumptions?" color=blue
   #8 @44 "options: YES " attr=rev_video
   #9 @44 assump 10 attr=underline
   #10 @3 "5. Input clustering variable(s)?" color=blue
   #11 @7 "EX: make MSRP " attr=rev_video
   #12 @7 mpred1 80 required=yes attr=underline
   #13 @7 mpred2 80 attr=underline
   #13 @90 "contin var" color=blue
   #14 @3 "6. Any optional PROC CLUSTER options?" color=blue
   #15 @7 " EX: method=ave method=cen method=war k=5 trim=5 " attr=rev_video
   #16 @7 clusopt 80 attr=underline
   #17 @3 "7. Input ID variable?" color=blue
   #18 @7 " EX: id " attr=rev_video
   #19 @7 ID 30 attr=underline
   #17 @40 "8. Variable standardization method?" color=blue
   #18 @44 prin 30 attr=underline
   #20 @3 "9. Folder to save output?" color=blue
   #21 @7 "EX: c:\ " attr=rev_video
   #22 @7 output 80 attr=underline
   #23 @3 "10. Folder to save graphics?" color=blue
   #24 @7 "EX: c:\ " attr=rev_video
   #25 @7 dir 80 attr=underline
   #29 @3 "11. Display or save SAS graphs?" color=blue
   #30 @7 graph 20 required=yes attr=underline
   #33 @10 " USE THE ENTER KEY TO RUN THIS MACRO (don't hit the submit key) " color=red attr=rev_video;

%display mycluster;

/* Invoke the stored compiled macro used by the GUI */
libname mylib base "d:\macroproject\cluster";
options sasmstore=mylib mstored;

%mycluster
```
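The code above only calls %mycluster; the macro itself has to be compiled and stored in the MYLIB.SASMACR catalog beforehand. Fernandez's actual macro is not shown here, so the following is a hypothetical sketch of that compile-and-store step. The macro body is invented for illustration: it uses only some of the macro variables the %WINDOW fields create (DATA, NUM, MPRED1, MPRED2, CLUSOPT, ID), assumes every clustering variable entered is numeric, and falls back to Ward's method (an assumed default) when no METHOD= is typed into the options field, since Proc Cluster needs a clustering method.

```sas
/* Hypothetical sketch, not Fernandez's macro: compile MYCLUSTER once and
   store it in MYLIB.SASMACR so later sessions can call %mycluster directly. */
libname mylib base "d:\macroproject\cluster";
options mstored sasmstore=mylib;

%macro mycluster / store des='Hypothetical cluster macro';
   /* DATA, NUM, MPRED1, MPRED2, CLUSOPT and ID are macro variables
      created by the fields of the %WINDOW named MYCLUSTER */
   %local opts;
   %let opts = &clusopt;

   /* Assumed default: use Ward's method if no METHOD= option was entered */
   %if %index(%upcase(&opts),METHOD) = 0 %then %let opts = method=ward &opts;

   /* Hierarchical clustering; the tree goes to WORK.TREE */
   proc cluster data=&data outtree=work.tree noprint &opts;
      var &mpred1 &mpred2;
      %if %length(&id) > 0 %then %do; id &id; %end;
   run;

   /* Cut the tree into &num clusters and carry the inputs along */
   proc tree data=work.tree out=work.clusout nclusters=&num noprint;
      copy &mpred1 &mpred2;
      %if %length(&id) > 0 %then %do; id &id; %end;
   run;

   /* Quick check of the cluster sizes */
   proc freq data=work.clusout;
      tables cluster;
   run;
%mend mycluster;
```

Submitted once, this leaves a compiled MYCLUSTER entry in the catalog; afterwards the libname and OPTIONS lines plus %display and %mycluster are enough in a new session.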