The challenges for SAS in Hadoop

For analytics tasks on the data stored on Hadoop, Python or R are freewares and easily installed in each data node of a Hadoop cluster. Then some open source frameworks for Python and R, or the simple Hadoop streaming would utilize the full strength of them on Hadoop. On the contrary, SAS is a proprietary software. A company may be reluctant to buy many yearly-expired licenses for a Hadoop cluster that is built on cheap commodity hardwares, and a cluster administrator will feel technically difficult to implement SAS for hundreds of the nodes. Therefore, the traditional ETL pipeline to pull data (when the data is not really big) from server to client could be a better choice for SAS, which is most commonly seen on a platform such as Windows/Unix/Mainframe instead of Linux. The new PROC HADOOP and SAS/ACCESS interface seem to be based on this idea.

Pull data through MySQL and Sqoop

Since SAS 9.3M2, PROC HADOOP can bring data from the cluster to the client by its HDFS statment. However, there are two concerns: first the data by PROC HADOOP will be unstructured out of Hadoop; second it is sometimes not necessary to load several GB size data into SAS at the beginning. Since Hadoop and SAS both have good connectivity with MySQL, MySQL can be used as an middleware o communicate them, which may ease the concerns above.

On the Cluster

The Hadoop edition used for this experiment is Cloudera’s CDH4. The data set,

purchases.txt is a tab delimited text file by a training course at Udacity. At any data node of a Hadoop cluster, the data transferring work should be carried out.MySQL

First the schema of the target table has to be set up before Sqoop enforces the

insert operations.# Check the head of the text file that is imported on Hadoop

hadoop fs -cat myinput\purchases.txt | head -5

# Set up the database and table

mysql --username mysql-username --password mysql-pwd

create database test1;

create table purchases (date varchar(10), time varchar(10), store varchar(20), item varchar(20), price decimal(7,2), method varchar(20));Sqoop

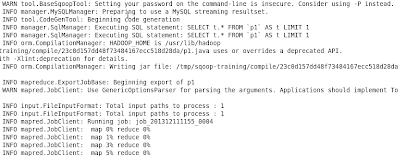

Sqoop is a handy tool to transfer bulk data between Hadoop and relational databases. It connects to MySQL via JDBC and automatically creates MapReduce functions with some simple commands. After MapReduce, the data from HDFS will be persistently and locally stored on MySQL.

# Use Sqoop to run MapReduce and export the tab delimited

# text file under specified directory to MySQL

sqoop export --username mysql-username --password mysql-pwd \

--export-dir myinput \

--input-fields-terminated-by '\t' \

--input-lines-terminated-by '\n' \

--connect jdbc:mysql://localhost/test1 \

--table purchasesOn the client

Finally on the client installed with SAS, the PROC SQL’s pass-through mechanism will empower the user to explore or download the data stored in MySQL at the node, which will be free of any of the Hadoop’s constraints.

proc sql;

connect to mysql (user=mysql-username password=mysql-pwd server=mysqlserv database=test1 port=11021);

select * from connection to mysql

(select * from purchases limit 10000);

disconnect from mysql;

quit;